Development Board

Q: Why does the output of a network model running on the board differ from the output of the same model running on a PC?

A: For both Fixed and Offline models, the output on the PC and the output on the development board should be identical as long as the same input is used. You can verify this as follows:

Run simulator.py on the PC with the --dump_rawdata parameter when simulating the Fixed or Offline model. When the run finishes, a file named after the input image with a .bin suffix (image name + .bin) is created in the current directory. It contains the input data as preprocessed on the PC, saved in raw binary format. On the board, read this file, copy its entire contents into the model's input Tensor with memcpy, and then call MI_IPU_Invoke. The output obtained this way should be identical to the output on the PC.
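To confirm the result, you can save the board's output Tensor to a raw binary file, copy it to the PC, and compare it byte for byte with an output dump produced on the PC. The Python sketch below is a minimal, hypothetical helper for this: load_rawdata and first_mismatch are illustrative names, not part of the SDK, and the sketch assumes both outputs were written as raw bytes with the same data type and layout (for example, the PC result saved with numpy's ndarray.tofile). load_rawdata also checks that the --dump_rawdata file has exactly the byte size you expect for the input Tensor before you copy it on the board.

```python
from pathlib import Path
from typing import Optional


def load_rawdata(path: str, expected_nbytes: int) -> bytes:
    """Read a raw .bin dump and check that it exactly fills the input Tensor.

    expected_nbytes is the byte size of the model's input Tensor (an
    assumption supplied by the caller); a mismatch usually means the wrong
    file or different preprocessing settings were used.
    """
    data = Path(path).read_bytes()
    if len(data) != expected_nbytes:
        raise ValueError(
            f"{path}: got {len(data)} bytes, expected {expected_nbytes}"
        )
    return data


def first_mismatch(pc_output: bytes, board_output: bytes) -> Optional[int]:
    """Return the first differing byte offset, or None if the dumps match.

    If one dump is shorter, the offset where the shorter one ends is
    reported as the first mismatch.
    """
    for offset, (a, b) in enumerate(zip(pc_output, board_output)):
        if a != b:
            return offset
    if len(pc_output) != len(board_output):
        return min(len(pc_output), len(board_output))
    return None
```

For example, first_mismatch(Path("pc_output.bin").read_bytes(), Path("board_output.bin").read_bytes()) returns None when the two dumps are identical; the file names are placeholders.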

Q: Why is the shape of the output data seen when running the network model on the board different from the model's original output shape?

A: For both Fixed and Offline models, if dequantizations in input_config.ini is set to FALSE, the last dimension of the output data is rounded up (aligned) because of the way the hardware writes data. This therefore happens both when the model is simulated on a PC and when it runs on the board. If you set the -c parameter of simulator.py to Unknown, the output file contains a message like the following:

layer46-conv Tensor: { tensor dim:4, Original shape:[1 13 13 255], Alignment shape:[1 13 13 256] The following tensor data shape is alignment shape. tensor data: ...

In this message, Alignment shape is the shape of the data the model actually outputs. The same applies when you use calibrator_custom.fixed_simulator to create an instance of the fixed-point model, invoke it, and call get_output: the shape of the returned numpy.ndarray is also the aligned shape.

>>> print(result.shape)
(1, 13, 13, 256)
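If you need both shapes programmatically, they can be read from the tensor header that simulator.py writes. The sketch below is a hypothetical helper, parse_tensor_shapes; its regular expression is based only on the single layer46-conv example line shown above, so the wider format of the output file is an assumption and the pattern may need adjusting for your SDK version.

```python
import re
from typing import Tuple

# Matches the shape fields in a tensor header such as
# "Original shape:[1 13 13 255], Alignment shape:[1 13 13 256]".
# ASSUMPTION: the pattern is derived from one example line only.
_SHAPE_RE = re.compile(
    r"Original shape:\[([\d ]+)\],\s*Alignment shape:\[([\d ]+)\]"
)


def parse_tensor_shapes(header: str) -> Tuple[Tuple[int, ...], Tuple[int, ...]]:
    """Return (original_shape, alignment_shape) parsed from one header line."""
    match = _SHAPE_RE.search(header)
    if match is None:
        raise ValueError("no shape fields found in header")
    original = tuple(int(v) for v in match.group(1).split())
    aligned = tuple(int(v) for v in match.group(2).split())
    return original, aligned
```

Applied to the layer46-conv line above, it returns ((1, 13, 13, 255), (1, 13, 13, 256)), i.e. one extra element in the last dimension.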

To remove the padding added by alignment, refer to the calibrator_custom.simulator documentation for details.
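As a generic NumPy alternative to the approach in the calibrator_custom.simulator documentation, the padding can be dropped by slicing the aligned array back to the original shape. This is a minimal sketch, not the SDK's own method: strip_alignment is a hypothetical helper, and it assumes the padding sits only at the end of each dimension, as in the [1 13 13 255] to [1 13 13 256] example.

```python
import numpy as np


def strip_alignment(aligned: np.ndarray, original_shape: tuple) -> np.ndarray:
    """Drop the elements added by upward alignment.

    ASSUMPTION: padding is appended at the end of each dimension, so the
    valid data is the leading block of size original_shape.
    """
    if aligned.ndim != len(original_shape):
        raise ValueError("rank mismatch between data and original shape")
    if any(o > a for o, a in zip(original_shape, aligned.shape)):
        raise ValueError("original shape is larger than aligned shape")
    index = tuple(slice(0, n) for n in original_shape)
    return aligned[index]
```

For the result above, strip_alignment(result, (1, 13, 13, 255)).shape is (1, 13, 13, 255). Slicing returns a view; wrap it in np.ascontiguousarray if you need a compact copy, for example before writing it to a file.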

Q: Is the output of a model running on the board floating-point or fixed-point data?

A: The data type of the output on the board depends on the input_config.ini configuration used when converting the network. When dequantizations is set to TRUE, the corresponding output Tensor on the board contains floating-point data; otherwise it contains fixed-point data. You can check the data type of each output Tensor through the MI_IPU_GetInOutTensorDesc interface.
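When dequantizations is FALSE, the application has to convert the fixed-point output itself. The Python sketch below shows one way to interpret a dumped output buffer, but the conversion details are assumptions: it uses a generic per-tensor affine scheme, real = (q - zero_point) * scale, and the caller supplies the data type, scale, and zero point. Take the actual data type from MI_IPU_GetInOutTensorDesc and the quantization rule from the SDK documentation rather than relying on this formula. output_to_float is a hypothetical name.

```python
import numpy as np
from numpy.typing import DTypeLike


def output_to_float(raw: bytes, dtype: DTypeLike, scale: float = 1.0,
                    zero_point: int = 0) -> np.ndarray:
    """Interpret a raw output buffer and return float32 values.

    ASSUMPTION: floating-point buffers (dequantizations = TRUE) already hold
    real values; integer buffers use a per-tensor affine scheme.
    """
    data = np.frombuffer(raw, dtype=dtype)
    if np.issubdtype(data.dtype, np.floating):
        # Already dequantized on the board: just normalize the dtype.
        return data.astype(np.float32)
    # Fixed-point output: apply the assumed affine dequantization.
    return (data.astype(np.float32) - zero_point) * scale
```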

Q: How can I check how much memory the model occupies at runtime?

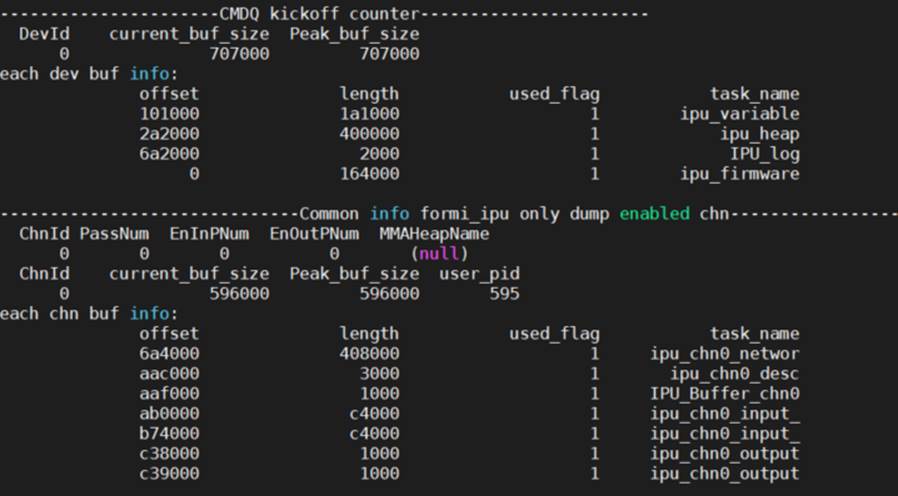

A: To view the memory usage status, check the parameters below:

cat /proc/mi_modules/mi_ipu/mi_ipu0

- ipu_variable: temporary memory used during inference by all network models

- ipu_heap: heap memory allocated with malloc by the IPU firmware

- IPU_log: memory used to store logs

- ipu_firmware: memory used to run the IPU firmware

- ipu_chn0_networ: memory used to load the network model of channel 0

- ipu_chn0_desc: memory used for the network descriptor of channel 0

- IPU_Buffer_chn0: temporary memory used by channel 0

- ipu_chn0_input: memory used by the input tensors of channel 0 (multiple entries mean that multiple input tensors are in use at the same time)

- ipu_chn0_output: memory used by the output tensors of channel 0 (multiple entries mean that multiple output tensors are in use at the same time)
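When several channels are loaded, it can help to total these entries per channel. The exact layout of /proc/mi_modules/mi_ipu/mi_ipu0 is only shown in the screenshot, so this Python sketch assumes you have already extracted (name, size) pairs from it; group_by_channel is a hypothetical helper. It counts any name containing chn followed by a number as belonging to that channel and everything else (ipu_variable, ipu_heap, IPU_log, ipu_firmware) as shared. A list of pairs is used instead of a dict because an entry such as ipu_chn0_input can appear more than once.

```python
import re
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Names like ipu_chn0_input or IPU_Buffer_chn0 belong to channel 0;
# names without "chn<N>" are treated as shared by all channels.
_CHANNEL_RE = re.compile(r"chn(\d+)", re.IGNORECASE)


def group_by_channel(entries: Iterable[Tuple[str, int]]) -> Dict[str, int]:
    """Sum entry sizes per channel; shared entries go under "shared"."""
    totals: Dict[str, int] = defaultdict(int)
    for name, size in entries:
        match = _CHANNEL_RE.search(name)
        key = f"chn{match.group(1)}" if match else "shared"
        totals[key] += size
    return dict(totals)
```

For example, with made-up sizes, group_by_channel([("ipu_heap", 10), ("ipu_chn0_input", 8), ("ipu_chn0_input", 8)]) returns {"shared": 10, "chn0": 16}.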