Using SGS_IPU_SDK to Build a Model

Operator Details

Operator Summary

The table below describes the operators available in SGS_IPU_SDK. BuiltinOperator, BuiltinOptions, and CustomCode are the parameters required to build each operator with SGS_IPU_SDK. In the Input and Output columns, "Tensor" means the input/output can have multiple dimensions, and "Scalar" means it holds a single value. "Variable" means the input can only be a variable, "Variable/Const" means it can be either a variable or a constant, and "Const" means it can only be a constant. Variable and Const Tensors are configured differently, as described later. To check the SDK version, run the following in a terminal:

```bash
python3 -c "import calibrator_custom; print(calibrator_custom.__version__)"
```

Operators:

| Operator | Input | Output | BuiltinOperator | BuiltinOptions | CustomCode | Note |
|---|---|---|---|---|---|---|
| Abs | Input0: Tensor(Variable) | Output0: Tensor(Variable) | ABS | AbsOptions | None | |
| Add | Input0: Tensor(Variable) | Output0: Tensor(Variable) | ADD | AddOptions | None | If Input1 is Const, its value type becomes INT32 after quantization. |
| | Input1: Tensor(Variable/Const) | | | | | |
| ArgMax | Input0: Tensor(Variable) | Output0: Tensor(Variable) | ARG_MAX | ArgMaxOptions | None | |
| | Input1: Scalar(Const: axis) | | | | | |
| AvgPool | Input0: Tensor(Variable) | Output0: Tensor(Variable) | AVERAGE_POOL_2D | Pool2DOptions | None | Input and output have the same quantization range. |
| Concatenation | Input(0 ~ N): Tensor(Variable) | Output0: Tensor(Variable) | CONCATENATION | ConcatenationOptions | None | Input and output have the same quantization range. |
| Conv2D | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CONV_2D | Conv2DOptions | None | Limitations: |
| | Input1: Tensor(Variable/Const: weights) | | | | | Pad range: [0, 7] |
| | Input2: Tensor(Const: bias) | | | | | Input1: H * W \< 255 |
| DepthwiseConv2D | Input0: Tensor(Variable) | Output0: Tensor(Variable) | DEPTHWISE_CONV_2D | DepthwiseConv2DOptions | None | Natively supported kernel sizes: 3\*3 (1.x); 3\*3, 6\*6, 9\*9 (others). For unsupported sizes, Conv2D is performed instead. |
| | Input1: Tensor(Variable/Const: weights) | | | | | Limitations: Pad range: [0, 1] |
| | Input2: Tensor(Const: bias) | | | | | Input1: H * W \< 255 |
| Equal | Input0: Tensor(Variable) | Output0: Tensor(Variable) | EQUAL | EqualOptions | None | Input and output have the same quantization range. |
| | Input1: Tensor(Variable/Const) | | | | | |
| Exp | Input0: Tensor(Variable) | Output0: Tensor(Variable) | EXP | ExpOptions | None | |
| Gather | Input0: Tensor(Variable) | Output0: Tensor(Variable) | GATHER | GatherOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const: positions) | | | | | |
| Greater | Input0: Tensor(Variable) | Output0: Tensor(Variable) | GREATER | GreaterOptions | None | Input and output have the same quantization range. |
| | Input1: Tensor(Variable/Const) | | | | | |
| GreaterEqual | Input0: Tensor(Variable) | Output0: Tensor(Variable) | GREATER_EQUAL | GreaterEqualOptions | None | Input and output have the same quantization range. |
| | Input1: Tensor(Variable/Const) | | | | | |
| LeakyRelu | Input0: Tensor(Variable) | Output0: Tensor(Variable) | LEAKY_RELU | LeakyReluOptions | None | |
| Less | Input0: Tensor(Variable) | Output0: Tensor(Variable) | LESS | LessOptions | None | Input and output have the same quantization range. |
| | Input1: Tensor(Variable/Const) | | | | | |
| Log | Input0: Tensor(Variable) | Output0: Tensor(Variable) | LOG | None | None | Only supported by S.x. |
| LogicalAnd | Input0: Tensor(Variable) | Output0: Tensor(Variable) | LOGICAL_AND | LogicalAndOptions | None | |
| | Input1: Tensor(Variable/Const) | | | | | |
| Logistic | Input0: Tensor(Variable) | Output0: Tensor(Variable) | LOGISTIC | None | None | |
| Maximum | Input0: Tensor(Variable) | Output0: Tensor(Variable) | MAXIMUM | MaximumMinimumOptions | None | Input and output have the same quantization range. |
| | Input1: Tensor(Variable/Const) | | | | | |
| MaxPool | Input0: Tensor(Variable) | Output0: Tensor(Variable) | MAX_POOL_2D | Pool2DOptions | None | Input and output have the same quantization range. |
| Mean | Input0: Tensor(Variable) | Output0: Tensor(Variable) | MEAN | None | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const: axis) | | | | | Input1 only supports [1, 2]. |
| Minimum | Input0: Tensor(Variable) | Output0: Tensor(Variable) | MINIMUM | MaximumMinimumOptions | None | Input and output have the same quantization range. |
| | Input1: Tensor(Variable/Const) | | | | | |
| Mul | Input0: Tensor(Variable) | Output0: Tensor(Variable) | MUL | MulOptions | None | |
| | Input1: Tensor(Variable/Const) | | | | | |
| Neg | Input0: Tensor(Variable) | Output0: Tensor(Variable) | NEG | NegOptions | None | |
| NotEqual | Input0: Tensor(Variable) | Output0: Tensor(Variable) | NOT_EQUAL | NotEqualOptions | None | Input and output have the same quantization range. |
| | Input1: Tensor(Variable/Const) | | | | | |
| Pack | Input(0 ~ N): Tensor(Variable) | Output0: Tensor(Variable) | PACK | PackOptions | None | Input and output have the same quantization range. |
| Pad | Input0: Tensor(Variable) | Output0: Tensor(Variable) | PAD | PadOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const: paddings) | | | | | |
| Prelu | Input0: Tensor(Variable) | Output0: Tensor(Variable) | PRELU | None | None | |
| | Input1: Tensor(Const: slope) | | | | | |
| ReduceMax | Input0: Tensor(Variable) | Output0: Tensor(Variable) | REDUCE_MAX | None | None | |
| | Input1: Tensor(Const: indices) | | | | | |
| Relu | Input0: Tensor(Variable) | Output0: Tensor(Variable) | RELU | None | None | |
| Relu6 | Input0: Tensor(Variable) | Output0: Tensor(Variable) | RELU6 | None | None | |
| ReluN1To1 | Input0: Tensor(Variable) | Output0: Tensor(Variable) | RELU_N1_TO_1 | None | None | |
| Reshape | Input0: Tensor(Variable) | Output0: Tensor(Variable) | RESHAPE | ReshapeOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const: shape) | | | | | |
| ResizeBilinear | Input0: Tensor(Variable) | Output0: Tensor(Variable) | RESIZE_BILINEAR | ResizeBilinearOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const: H, W size) | | | | | |
| ResizeNearestNeighbor | Input0: Tensor(Variable) | Output0: Tensor(Variable) | RESIZE_NEAREST_NEIGHBOR | ResizeNearestNeighborOptions | None | Not supported by 1.x. |
| | Input1: Tensor(Const: H, W size) | | | | | |
| Round | Input0: Tensor(Variable) | Output0: Tensor(Variable) | ROUND | None | None | |
| Rsqrt | Input0: Tensor(Variable) | Output0: Tensor(Variable) | RSQRT | None | None | |
| Slice | Input0: Tensor(Variable) | Output0: Tensor(Variable) | SLICE | SliceOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const: begin) | | | | | |
| | Input2: Tensor(Const: size) | | | | | |
| Softmax | Input0: Tensor(Variable) | Output0: Tensor(Variable) | SOFTMAX | SoftmaxOptions | None | Operates on the last (innermost) dimension. To apply it to another dimension, first transpose that dimension to the last position. |
| Split | Input0: Scalar(Const: axis) | Output0: Tensor(Variable) | SPLIT | SplitOptions | None | Input1 and Output0 have the same quantization range. |
| | Input1: Tensor(Variable) | | | | | |
| SplitV | Input0: Tensor(Variable) | Output0: Tensor(Variable) | SPLIT_V | SplitVOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Scalar(Const: num_or_size_splits) | | | | | |
| | Input2: Scalar(Const: axis) | | | | | |
| Sqrt | Input0: Tensor(Variable) | Output0: Tensor(Variable) | SQRT | None | None | |
| StridedSlice | Input0: Tensor(Variable) | Output0: Tensor(Variable) | STRIDED_SLICE | StridedSliceOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const: begin) | | | | | |
| | Input2: Tensor(Const: end) | | | | | |
| | Input3: Tensor(Const: strides) | | | | | |
| Sub | Input0: Tensor(Variable) | Output0: Tensor(Variable) | SUB | SubOptions | None | If Input1 is Const, its value type becomes INT32 after quantization. |
| | Input1: Tensor(Variable/Const) | | | | | |
| Sum | Input0: Tensor(Variable) | Output0: Tensor(Variable) | SUM | None | None | |
| | Input1: Tensor(Const: indices) | | | | | |
| Tanh | Input0: Tensor(Variable) | Output0: Tensor(Variable) | TANH | None | None | |
| Tile | Input0: Tensor(Variable) | Output0: Tensor(Variable) | TILE | TileOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const: multiples) | | | | | |
| Transpose | Input0: Tensor(Variable) | Output0: Tensor(Variable) | TRANSPOSE | TransposeOptions | None | Input0 and Output0 have the same quantization range. |
| | Input1: Tensor(Const) | | | | | |
| Unpack | Input0: Tensor(Variable) | Output(0 ~ N): Tensor(Variable) | UNPACK | UnpackOptions | None | |
| TFLite_Detection_NMS | Input(0 ~ 6): Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | TFLite_Detection_NMS | The number of inputs/outputs depends on the definition of cus_options: |
| | | Output1: Tensor(Variable) | | | | `cus_options =` |
| | | Output2: Tensor(Variable) | | | | `[(b"input_coordinate_x1",0,"int"),` |
| | | Output3: Scalar(Variable) | | | | `(b"input_coordinate_y1",1,"int"),` |
| | | Output4: Tensor(Variable, optional) | | | | `(b"input_coordinate_x2",2,"int"),` |
| | | | | | | `(b"input_coordinate_y2",3,"int"),` |
| | | | | | | `(b"input_class_idx",6,"int"),` |
| | | | | | | `(b"input_score_idx",5,"int"),` |
| | | | | | | `(b"input_confidence_idx",4,"int"),` |
| | | | | | | `(b"input_facecoordinate_idx",-1,"int"),` |
| | | | | | | `(b"output_detection_boxes_idx",0,"int"),` |
| | | | | | | `(b"output_detection_classes_idx",1,"int"),` |
| | | | | | | `(b"output_detection_scores_idx",2,"int"),` |
| | | | | | | `(b"output_num_detection_idx",3,"int"),` |
| | | | | | | `(b"output_detection_boxes_index_idx",-1,"int"),` |
| | | | | | | `(b"nms",0,"float"),` |
| | | | | | | `(b"clip",1,"float"),` |
| | | | | | | `(b"max_detections",100,"int"),` |
| | | | | | | `(b"max_classes_per_detection",1,"int"),` |
| | | | | | | `(b"detections_per_class",1,"int"),` |
| | | | | | | `(b"num_classes",80,"int"),` |
| | | | | | | `(b"bmax_score",1,"int"),` |
| | | | | | | `(b"num_classes_with_background",1,"int"),` |
| | | | | | | `(b"nms_score_threshold",0.005,"float"),` |
| | | | | | | `(b"nms_iou_threshold",0.45,"float")]` |
| PostProcess_Unpack | Input0: Tensor(Variable) | Output(0 ~ 6): Tensor(Variable) | CUSTOM | None | PostProcess_Unpack | The number of outputs depends on the definition of cus_options: |
| | | | | | | `cus_options =` |
| | | | | | | `[(b"x_offset",0,"int"),` |
| | | | | | | `(b"x_lengh",1,"int"),` |
| | | | | | | `(b"y_offset",1,"int"),` |
| | | | | | | `(b"y_lengh",1,"int"),` |
| | | | | | | `(b"w_offset",2,"int"),` |
| | | | | | | `(b"w_lengh",1,"int"),` |
| | | | | | | `(b"h_offset",3,"int"),` |
| | | | | | | `(b"h_lengh",1,"int"),` |
| | | | | | | `(b"confidence_offset",4,"int"),` |
| | | | | | | `(b"confidence_lengh",1,"int"),` |
| | | | | | | `(b"scores_offset",5,"int"),` |
| | | | | | | `(b"scores_lengh",80,"int"),` |
| | | | | | | `(b"max_score",1,"int")]` |
| PostProcess_Max | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | PostProcess_Max | `cus_options =` |
| | | Output1: Tensor(Variable) | | | | `[(b"scores_lengh",90,"int"),` |
| | | | | | | `(b"skip",1,"int")]` |
| RoiPooling | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | RoiPooling | `cus_options =` |
| | Input1: Tensor(Variable: rois) | | | | | `[(b"spatial_scale",0.0625,"float")]` |
| Reciprocal | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | Reciprocal | Computes the reciprocal. Only supports the input range [0, 8]. |
| Atan2 | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | Atan2 | The input and output must be 16-bit quantized. |
| | Input1: Tensor(Variable) | | | | | Input range: [-32767, 32767]. |
| | | | | | | Output range: [-π, π]. |
| PhaseModify | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | PhaseModify | `if Input0(x, y) > Input1[0]:` |
| | Input1: Tensor(Const: [1, 4]) | | | | | `Output0(x, y) = Input0(x, y) + Input1[1]` |
| | | | | | | `else if Input0(x, y) < Input1[2]:` |
| | | | | | | `Output0(x, y) = Input0(x, y) + Input1[3]` |
| | | | | | | `else:` |
| | | | | | | `Output0(x, y) = Input0(x, y)` |
| CondLess | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | CondLess | `if Input0(x, y) < Input1:` |
| | Input1: Scalar(Variable/Const) | | | | | `Output0(x, y) = Input3` |
| | Input2: Tensor(Variable/Const) | | | | | `else:` |
| | Input3: Scalar(Variable/Const) | | | | | `Output0(x, y) = Input2(x, y)` |
| CondGreat | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | CondGreat | `if Input0(x, y) > Input1:` |
| | Input1: Scalar(Variable/Const) | | | | | `Output0(x, y) = Input3` |
| | Input2: Tensor(Variable/Const) | | | | | `else:` |
| | Input3: Scalar(Variable/Const) | | | | | `Output0(x, y) = Input2(x, y)` |
| CustomNotEqual | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | CustomNotEqual | `if Input0(x, y) != Input1:` |
| | Input1: Scalar(Const) | | | | | `Output0(x, y) = Input2(x, y)` |
| | Input2: Tensor(Variable) | | | | | `else:` |
| | | | | | | `Output0(x, y) = Input0(x, y)` |
| Clip | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | Clip | `if Input0(x, y) > Input2:` |
| | Input1: Scalar(Const) | | | | | `Output0(x, y) = Input2` |
| | Input2: Scalar(Const) | | | | | `else if Input0(x, y) < Input1:` |
| | | | | | | `Output0(x, y) = Input1` |
| | | | | | | `else:` |
| | | | | | | `Output0(x, y) = Input0(x, y)` |
| CustomFloorMod | Input0: Tensor(Variable) | Output0: Tensor(Variable) | CUSTOM | None | CustomFloorMod | For inputs near 0, the fixed-point result is always 0. |
| | Input1: Scalar(Const) | | | | | |

Operator Description

Operators whose BuiltinOperator is not CUSTOM are standard-defined operators rather than custom operators, and they behave the same as the corresponding TensorFlow operators. BuiltinOptions configures the properties of a BuiltinOperator, for example padding, stride, and dilation for the Conv2D operator. The BuiltinOptions definitions are located in SGS_IPU_SDK/Scripts/ConvertTool/third_party/tflite. To set the properties of a specific BuiltinOperator, check its associated BuiltinOptions to see which options are available.

Operators whose BuiltinOperator is CUSTOM are not standard-defined operators and therefore have no BuiltinOptions. Instead, you configure cus_options and then call the API to write the configuration into the model. The fields of cus_options are predefined, so you only need to modify the values listed for each operator in the table above. Custom operators without cus_options do not require any property configuration.
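Each cus_options entry in the table is a three-element tuple: a bytes key, a value, and a type string ("int" or "float"). Since a typo in a key or type string is easy to miss, a structural check before handing the list to the SDK can help. The following is a minimal sketch using a hypothetical helper, check_cus_options, which is not part of SGS_IPU_SDK; it only checks the tuple shape visible in the table, not what the SDK itself accepts. Note that the table contains entries such as `(b"nms",0,"float")`, so the sketch accepts integer values for "float" fields.

```python
def check_cus_options(cus_options):
    """Raise ValueError unless every entry is (bytes key, value, "int"/"float")."""
    seen = set()
    for entry in cus_options:
        if len(entry) != 3:
            raise ValueError(f"expected (key, value, type), got {entry!r}")
        key, value, type_name = entry
        if not isinstance(key, bytes):
            raise ValueError(f"key must be bytes, got {key!r}")
        if key in seen:
            raise ValueError(f"duplicate key {key!r}")
        seen.add(key)
        if isinstance(value, bool):
            raise ValueError(f"{key!r}: bool values are not expected")
        if type_name == "int":
            ok = isinstance(value, int)
        elif type_name == "float":
            ok = isinstance(value, (int, float))  # e.g. (b"nms", 0, "float")
        else:
            raise ValueError(f"{key!r}: unknown type {type_name!r}")
        if not ok:
            raise ValueError(f"{key!r}: value {value!r} does not match {type_name!r}")
    return True
```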

The input and output of AvgPool, Conv2D, DepthwiseConv2D, and MaxPool support only shapes in NHWC format. For Concatenation, Gather, Pack, Pad, Reshape, Slice, Split, SplitV, StridedSlice, Tile, Transpose, and Unpack, the input and output shapes support up to 10 dimensions. For other operators, a maximum of 4 dimensions is supported.
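The shape limits above can be encoded as a small lookup, for example to catch an unsupported rank before building a model. This is a sketch with hypothetical helper names (max_rank, check_shape) that are not SDK functions; it encodes only the three rules stated in this paragraph and treats "NHWC format" as meaning exactly four dimensions.

```python
# Operators whose input/output must be 4-D NHWC.
NHWC_ONLY = {"AvgPool", "Conv2D", "DepthwiseConv2D", "MaxPool"}
# Operators whose input/output may have up to 10 dimensions.
UP_TO_10_DIMS = {
    "Concatenation", "Gather", "Pack", "Pad", "Reshape", "Slice",
    "Split", "SplitV", "StridedSlice", "Tile", "Transpose", "Unpack",
}


def max_rank(op_name):
    """Maximum number of dimensions allowed for op_name's Tensors."""
    return 10 if op_name in UP_TO_10_DIMS else 4


def check_shape(op_name, shape):
    """Raise ValueError if shape breaks the documented limits for op_name."""
    if op_name in NHWC_ONLY and len(shape) != 4:
        raise ValueError(f"{op_name} needs a 4-D NHWC shape, got {shape}")
    if len(shape) > max_rank(op_name):
        raise ValueError(
            f"{op_name} supports at most {max_rank(op_name)} dims, got {len(shape)}")
    return True
```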

TFLite_Detection_NMS, PostProcess_Unpack, and PostProcess_Max are post-processing operators for detection networks. See SGS Post-Processing Module for details.

Operator Quantization

Because the hardware cannot perform floating-point arithmetic, the data in the model must be converted to integers before it can be computed. SGS_IPU_SDK calculates a step size from the minimum and maximum values of each Tensor and maps the interval [min, max] onto an integer range, [0, 255] or [-32767, 32767], depending on the bit width.
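The description above does not spell out rounding or zero-point details, so the following is only a minimal sketch of a linear min/max mapping under stated assumptions: the step size is (max - min) / (qmax - qmin), values are rounded to the nearest integer, and anything outside [min, max] saturates. The names quantize and dequantize are illustrative, not SDK APIs.

```python
def quantize(x, t_min, t_max, qmin=0, qmax=255):
    """Map a float in [t_min, t_max] onto the integer range [qmin, qmax]."""
    if t_max <= t_min:
        raise ValueError("t_max must be greater than t_min")
    step = (t_max - t_min) / (qmax - qmin)  # float value of one integer step
    q = round((x - t_min) / step) + qmin
    return max(qmin, min(qmax, q))  # values outside [t_min, t_max] saturate


def dequantize(q, t_min, t_max, qmin=0, qmax=255):
    """Inverse mapping: integer code back to an approximate float value."""
    step = (t_max - t_min) / (qmax - qmin)
    return (q - qmin) * step + t_min
```

For 16-bit data, pass `qmin=-32767, qmax=32767`. The round-trip error of dequantize(quantize(x)) is at most about half a step for values inside the range.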

The Calibrator section describes in detail how model statistics are collected and how a model is converted automatically. When you construct a model yourself, the minimum and maximum values of each operator's input and output are already known, so you can specify them for each Tensor directly using the following definition:

```python
[
    {
        "name": "FeatureExtractor/MobilenetV1/Conv2d_0/weights",
        "min": [-4.555312, -2.876907, -1.234419],
        "max": [7.364561, 3.960804, 6.0],
        "bit": 8
    },
    {...},
    ...
]
```

In the foregoing definition:

- "name" [string]: the name of the Tensor.
- "min" and "max" [list]: the minimum and maximum values of the Tensor. The number of values depends on the Tensor:
  - Only Tensor shapes in NHWC format are supported.
  - For Input1 (weights) of Conv2D, provide one min/max value per Conv2D kernel. For example, for a Conv2D Input1 with shape [16, 5, 5, 3], provide 16 min values and 16 max values. The 16 values may all be the same.
  - For all other Tensors, provide one min/max value per element of the innermost dimension. For example, for a Tensor with shape [1, 28, 28, 3], provide 3 min values and 3 max values, which may all be the same.
- "bit" [int]: the quantization bit width. The supported bit widths are 8, 16, and 32.
  - Input0 and Input1 of Conv2D and DepthwiseConv2D must use the same bit width. With 8-bit, the supported range is [0, 255] for Input0 and [-127, 127] for Input1. With 16-bit, the supported range is [-32767, 32767] for both Input0 and Input1. Input2 supports only 16-bit. For Output0, choose 8-bit or 16-bit according to the input of the next operator.
  - If Input1 of Add or Sub is Const, specify 32-bit.
  - For Abs, Add, Mul, Neg, LeakyRelu, Prelu, Relu, Relu6, and ReluN1To1, the input and output may use different bit widths (8-bit: range [0, 255]; 16-bit: range [-32767, 32767]). All remaining operators support only 16-bit input/output (range [-32767, 32767]).
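The counting rules above (one value per kernel for Conv2D Input1, one per innermost element otherwise) are easy to get wrong by hand. The sketch below builds one entry of the min/max list with a hypothetical helper, make_quant_entry, which is not an SDK function; it uses the same min and max for every channel, which the rules above explicitly allow.

```python
def make_quant_entry(name, shape, t_min, t_max, bit, conv_weights=False):
    """Return a {"name", "min", "max", "bit"} dict with the required value count.

    conv_weights=True marks Input1 of Conv2D, where the count is the number
    of kernels (shape[0]); otherwise the count is the innermost dimension.
    """
    if bit not in (8, 16, 32):
        raise ValueError(f"unsupported bit width {bit}")
    count = shape[0] if conv_weights else shape[-1]
    return {
        "name": name,
        "min": [t_min] * count,
        "max": [t_max] * count,
        "bit": bit,
    }
```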

Model Construction

To generate a model, write a Python script that calls the SigmaStar post-processing module, located at SGS_IPU_SDK/Scripts/postprocess. This module provides the TFLitePostProcess class, which implements a set of APIs for generating a TFLite FlatBuffer. You can use these APIs to create Tensors, Operators, and Models.

SGS_IPU_SDK/Scripts/postprocess/TFLitePostProcess.py implements the TFLitePostProcess class, and SGS_IPU_SDK/Scripts/postprocess/postprocess.py generates a model from a specified Python file. Therefore, you only need to write a generator_model.py that defines the model structure and then run the following command to generate the model:

```bash
python3 SGS_IPU_SDK/Scripts/postprocess/postprocess.py -n /path/to/generator_model.py
```

In your script, import the TFLitePostProcess class and create an instance of it. You then build the model by calling the methods of that instance:

```python
from TFLitePostProcess import *
from third_party import tflite

sgs_builder = TFLitePostProcess()
```

The steps to create a model are as follows:

- Create Buffers. A Buffer stores variable or constant data.
- Create Tensors. A Tensor wraps a Buffer with attributes such as name, shape, and data type, which makes the Buffer easier to describe. Because the output Tensor of one Operator is also the input Tensor of the next Operator, Tensors act as the bridges between Operators.
- Create Operators. Each Operator performs a specific computation; combining the required Operators implements the algorithm you want to build.
- Create the SubGraph and Model. All Operators of the algorithm are collected into one SubGraph. Currently, a Model can contain only one SubGraph.
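The rules behind these steps (Tensor and Operator names must be unique, and each Operator's inputs must already exist, either as model inputs, Const Tensors, or outputs of earlier Operators) can be checked on a plain-Python description of the graph before calling the SDK. The sketch below uses a hypothetical checker, check_graph, which is not part of SGS_IPU_SDK. It assumes Operators are listed in execution order, which is how the example later in this section builds them.

```python
def check_graph(model_inputs, const_tensors, operators):
    """Check names and wiring of a graph description.

    operators: list of (op_name, input_names, output_names) in execution order.
    """
    available = set()
    for name in [*model_inputs, *const_tensors]:
        if name in available:
            raise ValueError(f"duplicate Tensor name {name!r}")
        available.add(name)
    op_names = set()
    for op_name, inputs, outputs in operators:
        if op_name in op_names:
            raise ValueError(f"duplicate Operator name {op_name!r}")
        op_names.add(op_name)
        for name in inputs:
            if name not in available:
                raise ValueError(f"{op_name}: input {name!r} has not been created yet")
        for name in outputs:
            if name in available:
                raise ValueError(f"{op_name}: Tensor name {name!r} is already used")
            available.add(name)  # this output can now feed later Operators
    return True
```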

Creating a Tensor

Creating a Variable Tensor

```python
sgs_builder.buildTensor(shape: list, name: str)
```

This API creates a Variable Tensor and automatically creates a Variable Buffer for it.

Note: Tensor names must be unique, and only Tensor shapes in NHWC format are supported.

Creating a Const Tensor

A Const Tensor is a Tensor that contains data, and that data is saved in the model. To create a Const Tensor, serialize the data with the Python standard library module struct, create a Buffer holding the serialized data, and then create the Tensor. The following example creates a Const Tensor with the value -1 and shape [1]:

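```python
import struct

# Serialize the value -1 as float32 bytes.
muln1_vector = []
muln1_value = [-1]
for value in muln1_value:
    muln1_vector += bytearray(struct.pack("f", value))

# Create a Buffer named "mul_n1" with the data, then a FLOAT32 Tensor that uses it.
sgs_builder.buildBuffer("mul_n1", muln1_vector)
sgs_builder.buildTensor([1], "mul_n1", sgs_builder.getBufferByName("mul_n1"),
                        tflite.TensorType.TensorType().FLOAT32)
```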

```python
sgs_builder.buildBuffer(name: str, buffer_data: bytearray)
```

Creating a Const Tensor uses the same buildTensor method as creating a Variable Tensor, except that you pass two additional parameters: the Buffer and the data type. Keep track of the Tensor name, because you refer to Tensors by name when creating an Operator.
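If you create several Const Tensors, the serialization loop in the example above can be wrapped in a small helper. This is a sketch with a hypothetical name, pack_float32, not an SDK API. It uses the "<f" format to pin little-endian float32 explicitly; FlatBuffers stores its scalars in little-endian order, and on common little-endian hosts (x86, ARM) this produces the same bytes as the native "f" format used above.

```python
import struct


def pack_float32(values):
    """Serialize a list of numbers as little-endian float32 bytes (4 bytes each)."""
    return bytearray(struct.pack(f"<{len(values)}f", *values))
```

For example, `sgs_builder.buildBuffer("mul_n1", pack_float32([-1]))` would pass a bytearray, matching the documented `buffer_data: bytearray` parameter.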

Creating an Operator

Before creating an Operator, create its input and output Tensors. Then call the following two methods in order: buildOperatorCode creates the operator code (the BuiltinOperator or CustomCode of the Operator), and buildOperator creates the Operator itself:

```python
sgs_builder.buildOperatorCode(opcode_name: str, builtin_code: tflite.BuiltinOperator, custom_code: str)
sgs_builder.buildOperator(opcode_name: str, input_names: list, output_names: list,
                          builtin_options_type: tflite.BuiltinOptions,
                          builtin_options: tflite.BuiltinOptions.BuiltinOptions,
                          custom_options: bytearray)
```

opcode_name gives the Operator to be created a name; Operator names must be unique, and the examples below pass the same opcode_name to both calls. For builtin_code, custom_code, and builtin_options_type, use the values in the BuiltinOperator, CustomCode, and BuiltinOptions columns of the table in the Operator Summary section, respectively. For CUSTOM operators, pass the Options created from cus_options as custom_options.

Creating BuiltinOptions

Operators with BuiltinOperator other than CUSTOM are standard-defined operators. BuiltinOptions provides options to configure the properties of the associated BuiltinOperator. In the following, we will illustrate how to create the Options of the Split operator.

Here are the contents of the SGS_IPU_SDK/Scripts/ConvertTool/third_party/tflite/SplitOptions.py file:

```python
# automatically generated by the FlatBuffers compiler, do not modify

# namespace: tflite

from third_party.python import flatbuffers

class SplitOptions(object):
    __slots__ = ['_tab']

    @classmethod
    def GetRootAsSplitOptions(cls, buf, offset):
        n = flatbuffers.encode.Get(flatbuffers.packer.uoffset, buf, offset)
        x = SplitOptions()
        x.Init(buf, n + offset)
        return x

    # SplitOptions
    def Init(self, buf, pos):
        self._tab = flatbuffers.table.Table(buf, pos)

    # SplitOptions
    def NumSplits(self):
        o = flatbuffers.number_types.UOffsetTFlags.py_type(self._tab.Offset(4))
        if o != 0:
            return self._tab.Get(flatbuffers.number_types.Int32Flags, o + self._tab.Pos)
        return 0

def SplitOptionsStart(builder): builder.StartObject(1)
def SplitOptionsAddNumSplits(builder, numSplits): builder.PrependInt32Slot(0, numSplits, 0)
def SplitOptionsEnd(builder): return builder.EndObject()
```

From the definition of the penultimate function, SplitOptionsAddNumSplits, you can see that the Split operator has only one option, NumSplits, whose value type is INT32.

By calling the last three functions in sequence and getting the return value of the last function, you can generate the Options of the Split operator, which you can encapsulate into a function call:

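```python
from third_party.tflite.SplitOptions import (
    SplitOptionsAddNumSplits, SplitOptionsEnd, SplitOptionsStart)

def createSplitOptions(sgs_builder, numSplits):
    # The generated functions expect a FlatBuffers builder, which the
    # TFLitePostProcess instance holds as sgs_builder.builder.
    SplitOptionsStart(sgs_builder.builder)
    SplitOptionsAddNumSplits(sgs_builder.builder, numSplits)
    split_options = SplitOptionsEnd(sgs_builder.builder)
    return split_options
```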

In practice, you only need to pass the TFLitePostProcess instance and an INT32 value to generate the Options of the Split operator.

In SGS_IPU_SDK/Scripts/postprocess/TFLitePostProcess.py, frequently used Options are already wrapped in this way. You can call these methods on the TFLitePostProcess instance directly, or follow the same pattern to add Options methods for other Operators to the TFLitePostProcess class.
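When writing an Options helper for another operator, it can help to see how the generated builder and reader functions correspond. For SplitOptions, SplitOptionsAddNumSplits writes slot 0 (`PrependInt32Slot(0, ...)`), and NumSplits reads the same field back through `self._tab.Offset(4)`. This follows from the FlatBuffers vtable layout: the vtable starts with two uint16 values (vtable size and object size), followed by one uint16 entry per field. The tiny sketch below, with a hypothetical function name, captures that mapping.

```python
def vtable_offset(slot):
    """Vtable byte offset that a generated reader uses for field number `slot`."""
    # 2 bytes vtable size + 2 bytes object size, then 2 bytes per field entry.
    return 4 + 2 * slot
```

So a reader that calls `Offset(6)` corresponds to slot 1 in the matching `...Add...` builder function.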

Creating Custom Options

For operators whose BuiltinOperator is CUSTOM, the fields of cus_options are predefined. Modify the values listed for the operator in the table above, and then create the Options as follows:

```python
options = sgs_builder.createFlexBuffer(cus_options)
```
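Because the cus_options fields are predefined, a typical edit changes one value and keeps the rest as listed in the table. A minimal sketch, using a hypothetical helper with_option that is not part of the SDK, returns a modified copy and refuses keys that are not already present:

```python
def with_option(cus_options, key, value):
    """Return a copy of cus_options with `key` (bytes) set to `value`.

    The declared type string of the entry is kept unchanged.
    Raises KeyError if the key is not one of the predefined fields.
    """
    updated = []
    found = False
    for k, v, type_name in cus_options:
        if k == key:
            v = value
            found = True
        updated.append((k, v, type_name))
    if not found:
        raise KeyError(key)
    return updated
```

The result can then be passed to createFlexBuffer, for example `sgs_builder.createFlexBuffer(with_option(cus_options, b"scores_lengh", 80))`.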

Example

To create DepthwiseConv2D:

```python
conv0_inputs = ['input0', 'weight0', 'bias0']
conv0_outputs = ['output0']
sgs_builder.buildOperatorCode("output0", tflite.BuiltinOperator.BuiltinOperator().DEPTHWISE_CONV_2D)
DepthWiseConv2DOptions = sgs_builder.createDepthWiseConv2DOptions(2, 1, 1, 0, 1, 1, 1, 1, 1, 1)
sgs_builder.buildOperator("output0", conv0_inputs, conv0_outputs,
                          tflite.BuiltinOptions.BuiltinOptions().DepthwiseConv2DOptions,
                          DepthWiseConv2DOptions)
```

To create RoiPooling:

```python
roi0_inputs = ['input0', 'rois']
roi0_outputs = ['output0']
sgs_builder.buildOperatorCode("output0", tflite.BuiltinOperator.BuiltinOperator().CUSTOM, 'RoiPooling')
cus_options = [(b"spatial_scale", 0.0625, "float")]
RoipoolOptions = sgs_builder.createFlexBuffer(cus_options)
sgs_builder.buildOperator("output0", roi0_inputs, roi0_outputs, None, None, RoipoolOptions)
```

Note: When creating an Operator, set the shapes of its input and output Tensors as the Operator requires. If a shape does not meet the Operator's requirements, the results of the generated model are not guaranteed to be correct, and unexpected errors may occur at run time.

Creating a Model

Once the Tensor and Operator are created, you can use the following template to create the model:

```python
sgs_builder.subgraphs.append(sgs_builder.buildSubGraph(model_config["input"], model_config['output'], model_config["name"]))
sgs_builder.model = sgs_builder.createModel(3, sgs_builder.operator_codes, sgs_builder.subgraphs, model_config["name"], sgs_builder.buffers)
file_identifier = b'TFL3'
sgs_builder.builder.Finish(sgs_builder.model, file_identifier)
buf = sgs_builder.builder.Output()
```

Finally, write the binary data in buf to a file to generate the model file.
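Writing buf is a plain binary file write. As a sanity check, the sketch below also verifies the file identifier first: when a FlatBuffer is finished with a file identifier, the 4 identifier bytes follow the 4-byte root offset, so bytes 4 to 8 of the output should be b'TFL3'. The helper name save_model and the output path are hypothetical, not part of the SDK.

```python
def save_model(buf, path, file_identifier=b"TFL3"):
    """Write serialized model bytes to `path` after checking the file identifier.

    Returns the number of bytes written.
    """
    data = bytes(buf)
    if data[4:8] != file_identifier:
        raise ValueError(f"unexpected file identifier {data[4:8]!r}")
    with open(path, "wb") as f:
        f.write(data)
    return len(data)
```

For example, `save_model(buf, "generated_model.tflite")`, where the file name is only an illustration.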

Defining Tensor min/max Values

As described in Operator Quantization, specify for each Tensor min/max values whose count matches the number of elements in its innermost dimension, and then save them to a file with pickle:

```python
import pickle

import_param = []

input_param = dict()
input_param['name'] = 'mul_n1'
input_param['bit'] = 16
input_param['min'] = [-1]
input_param['max'] = [-1]
import_param.append(input_param)

with open('import.pkl', 'wb') as f:
    pickle.dump(import_param, f)
```

If the actual data is less than the min value, the result will be the min value, and if the actual data is greater than the max value, the result will be the max value.
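Since out-of-range data saturates silently, it is worth checking the saved file before using it. The following is a minimal sketch with a hypothetical function name, load_and_check_import_param; it loads the pickle and checks only the rules stated in this document (supported bit widths, matching min/max lengths) plus min not exceeding max.

```python
import pickle

VALID_BITS = {8, 16, 32}


def load_and_check_import_param(path):
    """Load a min/max pickle and check each entry's basic consistency."""
    with open(path, "rb") as f:
        params = pickle.load(f)
    for entry in params:
        name = entry["name"]
        if entry["bit"] not in VALID_BITS:
            raise ValueError(f"{name}: unsupported bit width {entry['bit']}")
        if len(entry["min"]) != len(entry["max"]):
            raise ValueError(f"{name}: min and max have different lengths")
        for lo, hi in zip(entry["min"], entry["max"]):
            if lo > hi:
                raise ValueError(f"{name}: min {lo} is greater than max {hi}")
    return params
```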

Example of Model Construction

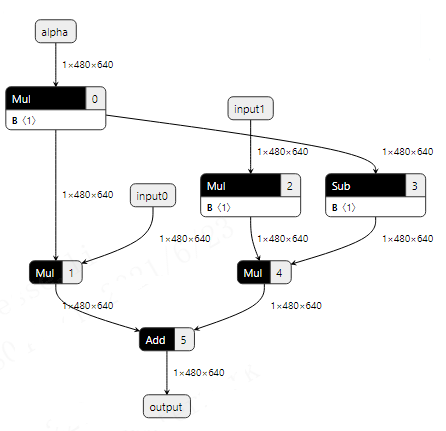

In the following example, we will illustrate the method to construct a model using the algorithm set forth below:

As shown in the table in the Operator Summary section, the second input (Input1) of Add, Mul, and Sub can be either a Variable or a Const, so constants can be fed to these operators directly. In addition, to keep α · input0 within the INT16 range, α must be normalized to [0, 1]. The above equation can be equivalently converted into the following form:

To prevent overflow, only the following part is implemented:

$$
\text{output} = \frac{\alpha}{128} \cdot \text{input0} + \text{input1} \cdot (-1) \cdot \left(\frac{\alpha}{128} - 1\right)
$$

import numpy as np

from TFLitePostProcess import *

from anchor_param import *

from third_party import tflite

import math

import pickle

def gen_import_param(model_config):

import_param = []

for name in [*model_config['input'], model_config['output'][0], 'mul0', 'mul1', 'mul2']:

input_param = dict()

input_param['name'] = name

input_param['bit'] = 16

input_param['min'] = [-32767] * model_config['input_shape'][0][-1]

input_param['max'] = [32767] * model_config['input_shape'][0][-1]

import_param.append(input_param)

for name in ['mul_alpha', 'sub0']:

input_param = dict()

input_param['name'] = name

input_param['bit'] = 16

input_param['min'] = [0] * model_config['input_shape'][0][-1]

input_param['max'] = [1] * model_config['input_shape'][0][-1]

import_param.append(input_param)

input_param = dict()

input_param['name'] = 'mul_n1'

input_param['bit'] = 16

input_param['min'] = [-1]

input_param['max'] = [-1]

import_param.append(input_param)

input_param = dict()

input_param['name'] = 'sub_1'

input_param['bit'] = 32

input_param['min'] = [1]

input_param['max'] = [1]

import_param.append(input_param)

input_param = dict()

input_param['name'] = 'mul_f'

input_param['bit'] = 16

input_param['min'] = [0.0078125]

input_param['max'] = [0.0078125]

import_param.append(input_param)

with open('import.pkl', 'wb') as f:

pickle.dump(import_param, f)

def buildGraph(sgs_builder, model_config):
    input_shape = model_config['input_shape'][0]
    sgs_builder.buildBuffer('NULL')  # the 0th entry of the buffer array must be an empty buffer (sentinel)
    sgs_builder.buildTensor(input_shape, model_config["input"][0])
    sgs_builder.buildTensor(input_shape, model_config["input"][1])
    sgs_builder.buildTensor(input_shape, model_config["input"][2])

    # Create Const Mul (-1)
    muln1_vector = []
    muln1_value = [-1]
    for value in muln1_value:
        muln1_vector += bytearray(struct.pack("f", value))
    sgs_builder.buildBuffer("mul_n1", muln1_vector)
    sgs_builder.buildTensor([1], "mul_n1", sgs_builder.getBufferByName("mul_n1"), tflite.TensorType.TensorType().FLOAT32)

    # Create Const Mul (0.0078125) -> 1 / 128
    mulf_vector = []
    mulf_value = [0.0078125]
    for value in mulf_value:
        mulf_vector += bytearray(struct.pack("f", value))
    sgs_builder.buildBuffer("mul_f", mulf_vector)
    sgs_builder.buildTensor([1], "mul_f", sgs_builder.getBufferByName("mul_f"), tflite.TensorType.TensorType().FLOAT32)

    # Create Const Sub (1)
    sub_vector = []
    sub_value = [1]
    for value in sub_value:
        sub_vector += bytearray(struct.pack("f", value))
    sgs_builder.buildBuffer("sub_1", sub_vector)
    sgs_builder.buildTensor([1], "sub_1", sgs_builder.getBufferByName("sub_1"), tflite.TensorType.TensorType().FLOAT32)

    # Mul (model_config['input'][2], 0.0078125)
    mul_inputs = [model_config['input'][2], 'mul_f']
    sgs_builder.buildTensor(input_shape, 'mul_alpha')
    mul_outputs = ['mul_alpha']
    sgs_builder.buildOperatorCode('mul_alpha', tflite.BuiltinOperator.BuiltinOperator().MUL)
    sgs_builder.buildOperator('mul_alpha', mul_inputs, mul_outputs, tflite.BuiltinOptions.BuiltinOptions().MulOptions, None)

    # Mul (input0, mul_alpha)
    mul0_inputs = [model_config['input'][0], 'mul_alpha']
    sgs_builder.buildTensor(input_shape, "mul0")
    mul0_outputs = ['mul0']
    sgs_builder.buildOperatorCode("mul0", tflite.BuiltinOperator.BuiltinOperator().MUL)
    sgs_builder.buildOperator("mul0", mul0_inputs, mul0_outputs, tflite.BuiltinOptions.BuiltinOptions().MulOptions, None)

    # Mul (input1, -1)
    mul1_inputs = [model_config["input"][1], 'mul_n1']
    sgs_builder.buildTensor(input_shape, "mul1")
    mul1_outputs = ['mul1']
    sgs_builder.buildOperatorCode("mul1", tflite.BuiltinOperator.BuiltinOperator().MUL)
    sgs_builder.buildOperator("mul1", mul1_inputs, mul1_outputs, tflite.BuiltinOptions.BuiltinOptions().MulOptions, None)

    # Sub (mul_alpha, 1)
    sub0_inputs = ['mul_alpha', 'sub_1']
    sgs_builder.buildTensor(input_shape, 'sub0')
    sub0_outputs = ['sub0']
    sgs_builder.buildOperatorCode('sub0', tflite.BuiltinOperator.BuiltinOperator().SUB)
    sgs_builder.buildOperator('sub0', sub0_inputs, sub0_outputs, tflite.BuiltinOptions.BuiltinOptions().SubOptions, None)

    # Mul (mul1, sub0)
    mul2_inputs = ['mul1', 'sub0']
    sgs_builder.buildTensor(input_shape, "mul2")
    mul2_outputs = ['mul2']
    sgs_builder.buildOperatorCode("mul2", tflite.BuiltinOperator.BuiltinOperator().MUL)
    sgs_builder.buildOperator("mul2", mul2_inputs, mul2_outputs, tflite.BuiltinOptions.BuiltinOptions().MulOptions, None)

    # Add (mul0, mul2)
    add0_inputs = ['mul0', 'mul2']
    sgs_builder.buildTensor(input_shape, model_config['output'][0])
    add0_outputs = model_config['output']
    sgs_builder.buildOperatorCode(model_config['output'][0], tflite.BuiltinOperator.BuiltinOperator().ADD)
    sgs_builder.buildOperator(model_config['output'][0], add0_inputs, add0_outputs, tflite.BuiltinOptions.BuiltinOptions().AddOptions, None)

    # Create SubGraph and Model
    sgs_builder.subgraphs.append(sgs_builder.buildSubGraph(model_config["input"], model_config['output'], model_config["name"]))
    sgs_builder.model = sgs_builder.createModel(3, sgs_builder.operator_codes, sgs_builder.subgraphs, model_config["name"], sgs_builder.buffers)
    file_identifier = b'TFL3'
    sgs_builder.builder.Finish(sgs_builder.model, file_identifier)
    buf = sgs_builder.builder.Output()
    return buf

def get_postprocess():
    model_config = {"name": "alpha_blending",
                    "input": ['input0', 'input1', 'alpha'],
                    "output": ['output'],
                    "input_shape": [[1, 480, 640]],
                    "out_shapes": [[1, 480, 640]]}
    sgs_builder = TFLitePostProcess()
    gen_import_param(model_config)
    sgs_buf = buildGraph(sgs_builder, model_config)
    outfilename = model_config["name"] + ".tflite"
    with open(outfilename, 'wb') as f:
        f.write(sgs_buf)
    print("\nWell Done! " + outfilename + " generated!\n")

def model_postprocess():
    return get_postprocess()

Note: Remember to define the model_postprocess function at the end of the script. It calls the functions defined before it, and SGS_IPU_SDK/Scripts/postprocess/postprocess.py relies on it to create the model.
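When comparing the generated model with simulator output, a float reference of what buildGraph computes can be handy. The sketch below is a hypothetical helper (alpha_blend_reference is not part of SGS_IPU_SDK) that mirrors the operator chain element by element on flat Python lists. It ignores quantization, so simulator results may differ from it by rounding. Algebraically the chain reduces to output = input0 * a + input1 * (1 - a), where a = alpha * 0.0078125 = alpha / 128.

```python
def alpha_blend_reference(input0, input1, alpha):
    """Float reference of the alpha_blending graph (hypothetical helper)."""
    if not (len(input0) == len(input1) == len(alpha)):
        raise ValueError("all inputs must have the same number of elements")
    output = []
    for x0, x1, a in zip(input0, input1, alpha):
        mul_alpha = a * 0.0078125   # Mul(alpha, 1/128)
        mul0 = x0 * mul_alpha       # Mul(input0, mul_alpha)
        mul1 = x1 * -1.0            # Mul(input1, -1)
        sub0 = mul_alpha - 1.0      # Sub(mul_alpha, 1)
        mul2 = mul1 * sub0          # Mul(mul1, sub0) = input1 * (1 - mul_alpha)
        output.append(mul0 + mul2)  # Add(mul0, mul2)
    return output


# alpha = 128 selects input0, alpha = 0 selects input1, alpha = 64 averages them
print(alpha_blend_reference([100, 100, 100], [200, 200, 200], [128, 0, 64]))  # [100.0, 200.0, 150.0]
```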

Below is the input_config.ini file required to convert the model:

[INPUT_CONFIG]
inputs=input0,input1,alpha;
input_formats=RAWDATA_S16_NHWC,RAWDATA_S16_NHWC,RAWDATA_S16_NHWC;
quantizations=FALSE,FALSE,FALSE;
[OUTPUT_CONFIG]
outputs=output;
dequantizations=FALSE;
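Because each field lists one entry per input (or output), a mismatched count is an easy mistake to make when editing this file. The sketch below sanity-checks the file above with Python's standard configparser module. It is a hypothetical helper, not the SDK's own parser, and it assumes the one-entry-per-input layout shown above and that the trailing ';' can simply be stripped.

```python
import configparser

INPUT_CONFIG_TEXT = """
[INPUT_CONFIG]
inputs=input0,input1,alpha;
input_formats=RAWDATA_S16_NHWC,RAWDATA_S16_NHWC,RAWDATA_S16_NHWC;
quantizations=FALSE,FALSE,FALSE;
[OUTPUT_CONFIG]
outputs=output;
dequantizations=FALSE;
"""


def split_field(value):
    """Split a comma-separated field, dropping the trailing ';'."""
    return [item.strip() for item in value.strip().rstrip(";").split(",")]


def check_input_config(text):
    """Return (inputs, outputs) or raise ValueError on a count mismatch."""
    parser = configparser.ConfigParser()
    parser.read_string(text)
    inp, out = parser["INPUT_CONFIG"], parser["OUTPUT_CONFIG"]
    inputs = split_field(inp["inputs"])
    for key in ("input_formats", "quantizations"):
        if len(split_field(inp[key])) != len(inputs):
            raise ValueError(f"{key} must list one entry per input")
    outputs = split_field(out["outputs"])
    if len(split_field(out["dequantizations"])) != len(outputs):
        raise ValueError("dequantizations must list one entry per output")
    return inputs, outputs


print(check_input_config(INPUT_CONFIG_TEXT))  # (['input0', 'input1', 'alpha'], ['output'])
```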

If this is your first time, you can generate the model first, then open it with SGS_Netron and compare the sample code against the graph.

After the model is generated, it looks something like this when opened in SGS_Netron:

The min/max values of the model input and output have been deliberately set to [-32767, 32767]. Because the step size calculated from [-32767, 32767] is 1, both quantizations and dequantizations in input_config.ini can be set to FALSE, which omits dividing the model input by the step size and multiplying the model output by the step size.

If the min/max values of the model input and output cannot be set to [-32767, 32767], evaluate the data requirements of the model input/output, since the step size will then no longer be 1 and these division and multiplication steps can no longer be skipped.

If the innermost dimension of the model input or output is not a multiple of 8, the hardware aligns the innermost dimension of the data to a multiple of 8, and you need to skip the padding data. (Note: This happens only with version 1.x.)
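The step-size arithmetic can be sketched as follows. This assumes a symmetric int16 scheme in which step = max(|min|, |max|) / 32767; that formula is an assumption here, chosen because it reproduces the step size of 1 stated above. With a step of 1, quantizing an integer value leaves it unchanged and dequantizing only converts it to float, which is why both conversions can be skipped.

```python
def int16_step(min_val, max_val):
    """Assumed symmetric int16 step size: max(|min|, |max|) / 32767."""
    return max(abs(min_val), abs(max_val)) / 32767


def quantize(x, step):
    """Float value -> integer code (divide by the step size, then round)."""
    return round(x / step)


def dequantize(q, step):
    """Integer code -> float value (multiply by the step size)."""
    return q * step


step = int16_step(-32767, 32767)
print(step)                                          # 1.0
print(quantize(1234, step), dequantize(-42, step))   # 1234 -42.0
```

For comparison, a range such as [-1.0, 1.0] would give a step of about 3.05e-05 under the same assumption, so the divide and multiply could not be omitted.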
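A minimal sketch of skipping the padding on the host side is shown below. It assumes the buffer is row-major and that the padding elements are appended at the end of each innermost row; that layout is an assumption, so verify it on your platform. align_up and strip_innermost_padding are hypothetical helpers. In this example the innermost dimension is 640, already a multiple of 8, so no padding occurs.

```python
def align_up(n, alignment=8):
    """Round n up to the next multiple of alignment."""
    return (n + alignment - 1) // alignment * alignment


def strip_innermost_padding(flat, shape, alignment=8):
    """Drop per-row padding from a flat buffer whose innermost dim was aligned."""
    inner = shape[-1]
    padded = align_up(inner, alignment)
    rows = 1
    for dim in shape[:-1]:
        rows *= dim
    if len(flat) != rows * padded:
        raise ValueError(f"expected {rows * padded} elements, got {len(flat)}")
    result = []
    for r in range(rows):
        start = r * padded
        result.extend(flat[start:start + inner])  # keep only the valid elements
    return result


print(strip_innermost_padding(list(range(16)), [2, 3]))  # [0, 1, 2, 8, 9, 10]
```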

Model Conversion

Generating the Original Model

As described earlier, once the Python script that builds the model has been written, use the following command to generate the model:

python3 SGS_IPU_SDK/Scripts/postprocess/postprocess.py -n /path/to/generator_model.py

Because min/max values are defined for the Tensors, an import.pkl file is also generated. This file records the min/max values of each Tensor and specifies the data type to which each Tensor is quantized. Note that the parameters of these Tensors must strictly meet the operator requirements described above.
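To inspect what was recorded, import.pkl can be loaded with Python's standard pickle module. The sketch below assumes the file holds a list of dicts, as gen_import_param builds it, with 'min' and 'max' keys; the 'name' key is an assumption, so the helper falls back to a placeholder when it is absent. summarize_import_param is a hypothetical helper.

```python
import pickle


def summarize_import_param(path="import.pkl"):
    """Return (name, min, max) for each entry recorded in import.pkl."""
    with open(path, "rb") as f:
        import_param = pickle.load(f)
    summary = []
    for entry in import_param:
        # 'min'/'max' are the keys set by gen_import_param; 'name' is assumed
        summary.append((entry.get("name", "<unnamed>"), entry["min"], entry["max"]))
    return summary
```

For example, calling summarize_import_param('import.pkl') in the directory where postprocess.py was run lists the ranges, so a Tensor with a missing or unexpected range stands out before conversion.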

Converting a Float Model

The tool for converting a float model is located at SGS_IPU_SDK/Scripts/ConvertTool/ConvertTool.py.

Since the model is generated with the standard TFLite API, it can be converted using the tflite mode of ConvertTool.py:

python3 ~/SGS_IPU_SDK/Scripts/ConvertTool/ConvertTool.py tflite \
    --model_file alpha_blending.tflite \
    --input_config input_config.ini \
    --quant_file import.pkl \
    --output_file alpha_float.sim

A float model will be generated when the operation is done.

Converting a Fixed Model

Float models that have imported quantization information do not need dataset quantization to generate a fixed model. The tool for such models is located at SGS_IPU_SDK/Scripts/examples/sim_convert_fixed.py.

python3 ~/SGS_IPU_SDK/Scripts/examples/sim_convert_fixed.py \
    -m alpha_float.sim \
    --input_config input_config.ini

A fixed model will be generated when the operation is done.

If the --output parameter is not specified, the fixed model is generated in the same directory as the float model, with the filename suffix changed from float.sim to fixed.sim.

If the --output parameter is specified, the fixed model is generated at the specified path.

Note: Float models converted without importing min/max information as described above cannot be converted with this tool; they must be quantized with a quantization dataset instead. Please refer to Calibrator for details.

Converting an Offline Model

The tool for converting an offline model is located at SGS_IPU_SDK/Scripts/calibrator/compiler.py.

python3 ~/SGS_IPU_SDK/Scripts/calibrator/compiler.py \
    -m alpha_fixed.sim

An offline model will be generated when the operation is done.

If the --output parameter is not specified, the offline model is generated in the same directory as the fixed model, with the filename changed from fixed.sim to fixed.sim_sgsimg.img.

If the --output parameter is specified, the offline model is generated at the specified path.
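The four steps in this section can be chained from a script. The sketch below only builds the argument lists, using the commands and flags shown above and relying on the default output names (float.sim to fixed.sim, then fixed.sim to fixed.sim_sgsimg.img). build_pipeline is a hypothetical helper, and placing all four tools under a single sdk_root is an assumption.

```python
import os


def build_pipeline(generator_script, sdk_root="~/SGS_IPU_SDK",
                   tflite_model="alpha_blending.tflite",
                   input_config="input_config.ini",
                   quant_file="import.pkl",
                   float_model="alpha_float.sim"):
    """Return (argv lists for the four steps, expected offline model name)."""
    if not float_model.endswith("float.sim"):
        raise ValueError("default naming assumes the float model ends with float.sim")
    scripts = os.path.join(os.path.expanduser(sdk_root), "Scripts")
    # Default output names described above
    fixed_model = float_model[: -len("float.sim")] + "fixed.sim"
    offline_model = fixed_model + "_sgsimg.img"
    commands = [
        ["python3", os.path.join(scripts, "postprocess", "postprocess.py"),
         "-n", generator_script],
        ["python3", os.path.join(scripts, "ConvertTool", "ConvertTool.py"), "tflite",
         "--model_file", tflite_model, "--input_config", input_config,
         "--quant_file", quant_file, "--output_file", float_model],
        ["python3", os.path.join(scripts, "examples", "sim_convert_fixed.py"),
         "-m", float_model, "--input_config", input_config],
        ["python3", os.path.join(scripts, "calibrator", "compiler.py"),
         "-m", fixed_model],
    ]
    return commands, offline_model
```

Each list can then be passed to subprocess.run(argv, check=True), which stops the chain at the first step that fails.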